Intro: The Curve No One’s Watching

In our previous blog, we broke down a large-scale study out of Denmark that tracked AI adoption across thousands of workplaces. The takeaway? ChatGPT and other LLMs are everywhere—but wages, hours, and employment stayed flat. Talent isn’t seeing the payoff yet.

But that’s not the whole story.

A new study by METR (Model Evaluation & Threat Research) introduces a different lens—one that doesn’t measure impact on labour markets but instead asks a more fundamental question:

How long a task can an AI system reliably complete, start to finish, without help?

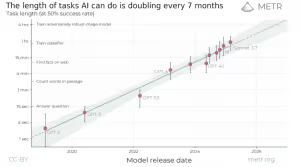

To answer that, the researchers proposed a new metric: the 50% task completion time horizon. This measures the typical length (in human hours) of tasks that an AI model can complete with at least 50% success.

According to their results, that number has been doubling every seven months since 2019. In 2025, it’s around 50 minutes. And if this growth trend holds, by the early 2030s we could see AI agents that can independently complete software tasks that currently take humans a full month.

So while the labor market hasn’t flinched yet, AI’s underlying capabilities are climbing fast—and quietly.

Let’s break down what this means.

2. The Metric in Practice: How Far AI Agents Have Come

To estimate the 50% success horizon, the METR team tested real-world tasks in software engineering and ML research—things that typically take humans anywhere from a few minutes to several days. These weren’t theoretical benchmarks. They were end-to-end tasks that required planning, tool use, adaptation, and persistence.

Examples included:

- Debugging a multi-step script

- Writing code that interacts with APIs

- Setting up and configuring ML pipelines

- Creating tools with basic documentation as context

In 2019, AI could only complete tasks that took less than a minute of human effort.

By early 2024, the best models crossed the 30-minute mark.

In 2025, models like Claude 3.7 and o1 are nearing 50 minutes.

And importantly, when agents do succeed, they often complete a 1-hour task in 1 to 10 minutes of compute time—far faster than a human would.

This isn’t about copying examples or following tightly-scripted steps. These agents operate with autonomy: they plan, fail, retry, use tools, and still finish.

The curve is steep. And it hasn’t slowed down. Based on trends going back to 2019, the researchers found a clear exponential pattern:

The 50% time horizon has been doubling every 7 months.

If that continues, by 2029–2031, AI agents could reliably complete software tasks that currently take a skilled human an entire work month—with no human in the loop.

3. What’s Driving the Curve?

The study didn’t just track outcomes—it looked at behavior. And that’s where things get interesting.

Six agents were tested: GPT-4, Claude 2.1, Claude 3.0, Claude 3.5 (Opus), Claude 3.7, and o1—a fine-tuned Claude 3.5 variant developed internally by METR. The real gains didn’t come from minor tweaks. They came from how the best models handled complexity.

Claude 3.7 and o1 stood out. Here’s why.

They didn’t panic when something failed

Older models tend to spiral when they hit an error—looping, repeating, or freezing. Claude 3.7 and o1 did something different. They paused, adjusted, and tried again. This made them far more reliable on multi-step tasks.

They used tools like they knew what they were doing

All agents had access to tools like the shell, file system, and web search. Stronger models used them with purpose. Instead of guessing syntax or fabricating solutions, they searched for real documentation, executed cleaner commands, and avoided detours.

They stayed on task

A common failure mode in older models: wandering. Starting one thing, switching to another, and never finishing either. The top agents in this study reduced that behavior dramatically. They moved through each step with more structure and fewer distractions.

They finished cleanly

Some models don’t know when the job is done. They overgenerate, re-run steps, or break working code. Claude 3.7 showed stronger “task finalization”—producing the right output, confirming it worked, and stopping. That alone boosted its success rate.

They managed context better

Long tasks often require switching between tools, editing multiple files, or jumping between sub-goals. That’s where weaker agents lost the thread. Claude 3.7 and o1 held context longer, made fewer mistakes across tool boundaries, and stitched together more of the workflow.

These changes aren’t obvious in benchmarks—but they’re easy to spot in the transcripts. The best agents weren’t just more accurate. They were more deliberate. They acted like they had a plan.

That’s what’s pushing the curve forward.

4. The Shift in How AI Behaves

What this study captured goes beyond answering questions or writing snippets of code. It observed something else: agents that could carry out full workflows on their own.

The models weren’t relying on prompt-by-prompt guidance. They ran independently. They used tools when needed, adapted when something failed, and made decisions about how to proceed. In many cases, they completed tasks end-to-end without human input.

That’s not just helpful. It’s operational.

The study didn’t frame this as general intelligence or breakthrough autonomy. But what it documented is meaningful: systems that can stay on track, interact with complex environments, and produce working results—without being micromanaged.

When the task completion horizon reaches 50 minutes, it already covers large portions of day-to-day software work. Add a few years of growth at the current pace, and agents will be capable of completing projects that span entire work weeks or longer.

This isn’t a hypothetical shift. The agents in this study ran those tasks, passed them, and did so under measurable conditions.

And the systems that pulled it off are already here.

This Is the Metric That Matters

We don’t need another benchmark leaderboard. We need to know when a model can take a task, run it start to finish, and ship something usable. This METR study that we have analyzed takes a different route and gives a concrete answer.

Instead of grading AI on trivia questions or math word problems, it measures task durability—how far a model can go before it breaks. It’s not a test of what the model knows. It’s a test of whether it can finish something useful without being hand-held.

At Solveo, we’ve already built workflows where AI handles full tasks: market research, content drafts (like, for example, a draft for this blog), internal evaluation, briefings…But we’ve still designed those systems around human checkpoints.

That won’t last much longer, I guess.

Claude 3.7 and o1 are showing what happens when agents can run tasks solo. Not assist—run. If your internal systems still rely on manual review at every step, you’re going to hit a ceiling that the models will bypass.

The time horizon metric tells us when the handoff becomes real.

We’re tracking it closely—not for the hype, but because it’s already forcing us to rethink how we scope work, allocate roles, and build around output instead of process.

Ignore this curve, and you’ll be stuck managing workflows that the next wave of AI will already be completing on its own.

.svg)